Anthropic has announced updates to its Consumer Terms and Privacy Policy. It will start training its AI models on user data if users accept the new policies, but you can opt out.

Up until now, Anthropic did not train Claude's language models on user data. This policy is changing: your chats with the AI will be used to improve Claude. The company says this helps strengthen its safeguards against scams, abuse and other harmful content. I guess every company is playing the security card to collect user data. This change does not affect existing chats (provided you have not accepted the new terms); however, it will apply to new and resumed chats and coding sessions after you accept the terms.

Users have until September 28, 2025 to accept the new terms. Accepting the new policies now brings them into effect immediately. You can opt out of Claude's AI training from the Privacy Settings page at https://claude.ai/settings/data-privacy-controls

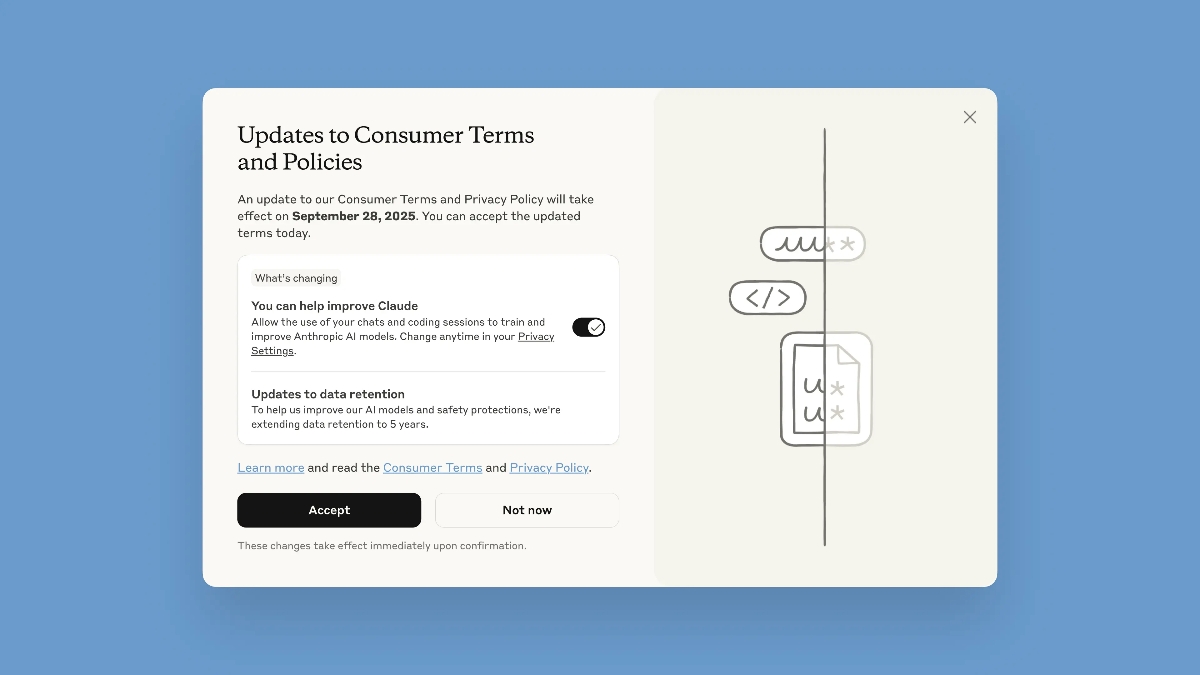

You will see a pop-up like the one in the screenshot. It has an option that says "You can help improve Claude". This toggle is enabled by default. Turn it off, then click on Accept to agree to the new terms. If you do not opt out before accepting, your data will be used to train the AI.

New users will be asked to make their choice when they sign up for an account. In addition to this, Anthropic will also store your data for much longer. The official wording says, "To help us improve our AI models and safety protections, we’re extending data retention to 5 years." That seems like an unusually long time to hold on to user data. The new terms say that the extended retention does not apply if users do not agree to provide their data for model training.

Anthropic says it does not sell user data to third parties. As The Verge notes, the company says it uses tools and automated processes to filter or obfuscate sensitive data.

These changes to the privacy policy aren't exclusive to the Claude Free plan; they also apply to the Pro and Max plans. However, these rules do not apply to services under "Anthropic's Commercial Terms, including Claude for Work, Claude Gov, Claude for Education, or API use, including via third parties such as Amazon Bedrock and Google Cloud’s Vertex AI".

It's never good to make data collection opt-out rather than opt-in. I'm not entirely sure why there is a deadline for opting out.

Vivaldi says it won't add AI features to its browser, while a respected AI service has turned heel on privacy.

What do you think about this move by Anthropic?

Thank you for being a Ghacks reader. The post Anthropic's new policies will let it train its AI models on user data, unless you opt out appeared first on gHacks Technology News.
